Forecasting is mostly vibes. So is expertise

Surely you wouldn't make up an opinion?

People often say ‘forecasting is just vibes’.

Often, yes.

But so is expertise.

My argument goes as follows:

Much expertise is prediction

Predictions are often vibes

Vibes can have good track records

Let’s go through these one by one.

Much expertise is prediction

Experts often apply their knowledge to unfamiliar or uncertain situations: “How much will this policy decrease child poverty?” “Will Biden leave in the next 3 days?” “Will I feel better after taking painkillers?” “How much will sea levels rise?”

Consider the experts you see most often in your life. For me it’s political commentators, military buffs and science YouTubers. Whenever they say “He will probably…” or “I expect…” or “It seems likely that…” they are making predictions.

In this sense there is no real gap between experts and forecasters; everyone does a bit of prediction. Doctors predict the effects of treatment, political experts guess how groups will vote, and generals anticipate the moves of other nations.

This discussion often comes up in AI policy: how far should we trust forecasts versus other kinds of statements? But there isn’t some privileged group who get to avoid doing prediction. Everyone is predicting the effects of AI on the economy, on our lives, on the human race. Some people think the effects will be big. Some people think they will be small.

There is no position on the future that doesn’t involve forecasts.

Predictions and expertise are vibes (and this is fine)

Look at this tweet from Brian Schwartz, a political finance reporter at CNBC.[1]

When Brian said that there were no plans for Biden to drop out, where did this opinion come from? Did he consult a big table in the back of his journalism book? Has he hacked every computer owned by the DNC? Was it revealed in a dream?

No. He made it up.

He sat down, considered the information and wrote this tweet, which matched his rough thoughts on the matter. I’m not saying this is bad. This is what all of us do when writing about something we aren’t certain of. Our brains consider different options and we try to find words that match the vibes in our heads.

Now, if he were a forecaster, people might notice the lack of a robust model or dataset and ask why he gets to talk so precisely about things. But he’s just a typical everyday expert, so we expect him to come up with vibes like this all day.

And importantly, people will cite his vibes. They tweet “Brian Schwartz says”, “According to Brian Schwartz at CNBC”, “CNBC thinks”. These are typical things for people to say in response to the vibes-based thoughts of an expert.

Making things up isn’t bad. I want to hear people telling me how their expert internal model responds to new information. That is going to involve judgement calls made in their own heads. Where else could these statements come from? Are they engraved in the firmament? Nope. People are making them up.

I am tired of people acting as if forecasters who put numbers on things are doing something deeply different from the many other experts working in fields without deep reference classes or very clear models. Just as we take experts seriously because of their years of study, I want to be taken seriously because of my track record. I am good at predicting things.

In AI discourse, there is a notion that AI Safety advocates are using numbers to launder their vibes, while everyone else really knows stuff. So when people say not to regulate AI, have they seen 1000 civilisations develop this technology? Or do they understand LLMs well enough to predict the next token? Because otherwise, they are making stuff up as well.

To me, it’s vibes all the way down. Nobody knows what is going to happen, and chance is the best language to discuss it in: it helps us see where we disagree and try to figure out what’s going on.

Vibes can have good track records

My younger brother is a good judge of character. When he doesn’t like someone, I recall him often being right. He catches things I miss. Somehow this gut feeling relates meaningfully to the world.

Vibes can be predictive.

And if you want to know how predictive, just start tracking them. Every time your friend says someone gives her the ick, note it down, and a year later, look at the list. Were the people disreputable? Maybe her ‘vibes’ are in fact a good source of information about the world.
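Here is a minimal sketch of that kind of tracking in Python. The record format, the field names and the example entries are all hypothetical; the idea is simply to compare how often a flagged person turned out badly against how often people turned out badly anyway (the base rate).

```python
from dataclasses import dataclass


@dataclass
class VibeRecord:
    """One logged gut call, checked later. Fields are hypothetical."""
    person: str
    flagged: bool         # did the friend say this person gave her the ick?
    turned_out_bad: bool  # a year later, did they turn out to be disreputable?


def vibe_report(records: list[VibeRecord]) -> dict[str, float]:
    """Compare the hit rate of flagged calls against the overall base rate."""
    flagged = [r for r in records if r.flagged]
    if not records or not flagged:
        raise ValueError("need at least one record and one flagged person")
    base_rate = sum(r.turned_out_bad for r in records) / len(records)
    hit_rate = sum(r.turned_out_bad for r in flagged) / len(flagged)
    return {"base_rate": base_rate, "hit_rate_when_flagged": hit_rate}


# Made-up log, for illustration only.
log = [
    VibeRecord("A", flagged=True, turned_out_bad=True),
    VibeRecord("B", flagged=True, turned_out_bad=False),
    VibeRecord("C", flagged=False, turned_out_bad=False),
    VibeRecord("D", flagged=False, turned_out_bad=False),
    VibeRecord("E", flagged=True, turned_out_bad=True),
    VibeRecord("F", flagged=False, turned_out_bad=True),
]
print(vibe_report(log))
```

If the hit rate when flagged stays well above the base rate over many entries, the vibes carry information. Six entries are far too few to tell either way.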

Forecasts are the same. They are not given legitimacy by having come down from the sky. They are given legitimacy by having a track record of being correct.

And this is true whether it’s Nate Silver’s forecasts or your dog barking at someone. Both might be signals, if we take the time to check.

The question here isn’t “can we trust forecasters because of something within themselves?” It is “do they have a history of getting questions like this right?”

Sometimes in AI people say ‘AI isn’t like other fields, we can’t trust forecasts there’. I think this is wrong, for reasons I get to below. But even if it’s true, I don’t think it supports the conclusion its advocates think it does.

If AI can’t be predicted, this applies to all experts, not just those I disagree with. We would have to proceed with no knowledge at all, and that would, in my opinion, call for a lot of caution. Right now I think there is almost certainly no risk from GPT5-5 level models, but that judgement is itself a forecast. If AI forecasting were impossible, I would want much more stringent regulation.

The issues with forecasting apply to other expertise

The upshot is that all solid arguments against forecasting apply to other forms of expertise too.

To discuss AI specifically, people criticise AI forecasts for the following:

Being outside the forecasting horizon of 3-5 years

Lacking base rates

Lacking a robust model

Having order of magnitude disagreements between forecasters

And

Being too out of sample to use current track records

The first 4 of these are good arguments. These are reasons that forecasts are less good than we might want them to be. I wish we were better at long-term forecasting, that there were easy base rates for AI and that forecasters and experts were more in agreement with one another.

But this isn’t a reason to ignore forecasts. What entitles other participants in the AI discourse to forecast 5 years ahead? Do they have reference classes to use? Do the order of magnitude differences mean we should ignore forecasts? Or do they point to uncertainty that we should take seriously, and that may be harder to spot without quantitative forecasts?

The final argument (“out of sample”) seems wrong to me. I don’t see why track records in geopolitics, pandemics and technological development shouldn’t transfer to x-risk. These aren’t tiny percentages we are talking about. If someone says there is a 1% chance, they may have forecast that enough times to be well calibrated. We can forecast milestones and see who has a good track record.
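To make “well calibrated” concrete, here is a minimal Python sketch that groups a forecaster’s resolved predictions by the exact probability they stated and reports how often those events actually happened. The function name and the forecast history are hypothetical; real platforms usually bin nearby probabilities together rather than grouping exact values.

```python
from collections import defaultdict


def calibration_by_stated_probability(forecasts):
    """Group resolved forecasts by the exact probability stated.

    forecasts: iterable of (stated_probability, happened) pairs.
    Returns {stated_probability: (observed_frequency, count)}.
    A calibrated forecaster's observed frequency should sit close to
    the stated probability in every group with enough forecasts.
    """
    groups = defaultdict(list)
    for p, happened in forecasts:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
        groups[p].append(bool(happened))
    return {p: (sum(outcomes) / len(outcomes), len(outcomes))
            for p, outcomes in sorted(groups.items())}


# Hypothetical history: 200 forecasts at 1% (2 happened), 50 at 70% (36 happened).
history = [(0.01, i < 2) for i in range(200)] + [(0.7, i < 36) for i in range(50)]
for p, (freq, n) in calibration_by_stated_probability(history).items():
    print(f"said {p:.0%}: happened {freq:.1%} of {n} times")
```

Rare events need many forecasts: with about 2 expected hits in 200, seeing 0 or 4 would not be surprising, so one small group proves little on its own.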

You have to check the track records

The actual issue here, I think, is that some people see forecasts as a proxy for good track records. They see someone using numbers and assume that because they are doing so, the norm is to treat their forecasts with respect.

Do I think Yudkowsky is a good forecaster? No. Show me his track record. I haven’t seen it. I don’t think I’ve seen a good forecasting track record from anyone with a P(doom) over 50%, though I guess there are a few.

538, a news publication that does sports and US election forecasting, has this track record page. But it turns out that these forecasts were made using Nate Silver’s forecasting model and he’s since left. It’s stolen valour!

To me, there is a similar issue within forecasting in AI. Just because someone gives a number does not mean you should treat them like they are a Superforecaster. I am grateful to anyone who is precise enough to use numbers, but if someone gives me a P(doom) number, before I take it at all seriously, I want to know how well-calibrated they are in general. I might ask questions like:

Are they a Superforecaster™? It’s pretty hard to become one, so it’s a good signal

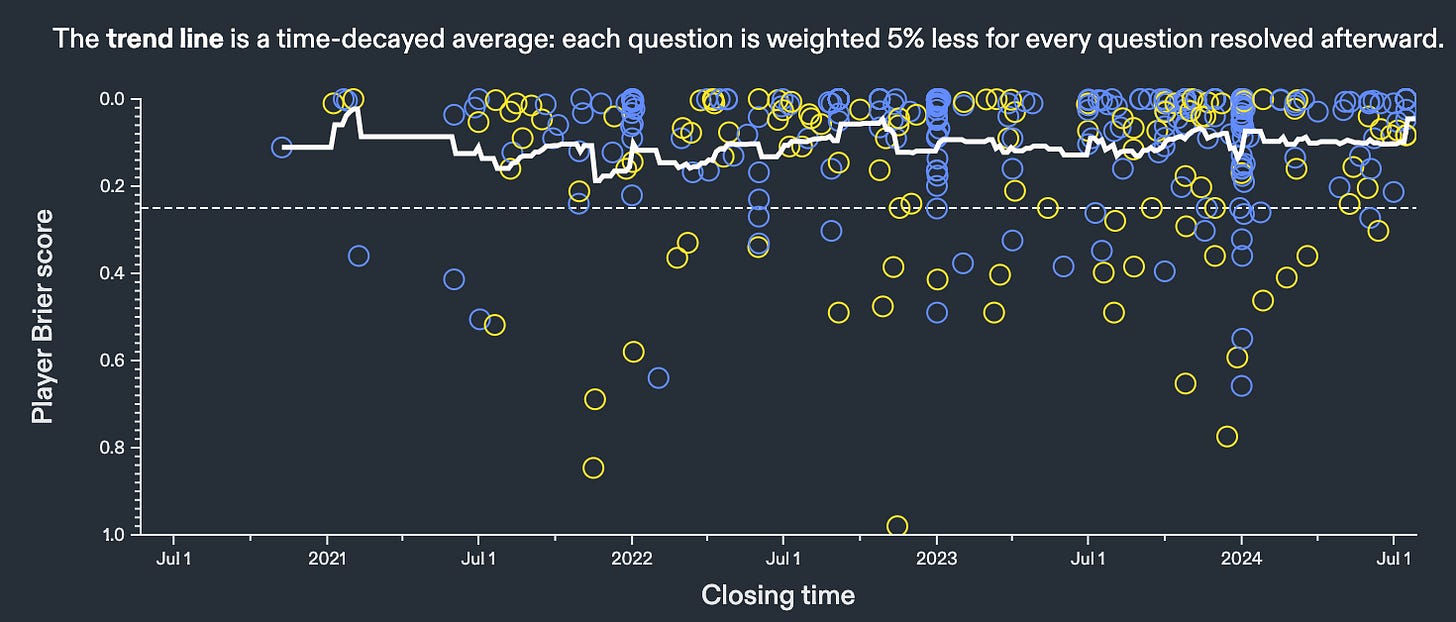

Do they use Metaculus? What’s their placement on the leaderboard?

Do they use Manifold or Polymarket? What’s their profit like? Is it across many serious markets or one big/insider bet?

Have they recorded personal forecasts, ideally publicly?

To try and empathise with people who criticise forecasting: if I thought that someone giving a number meant that policy experts should listen to them, I’d be annoyed that that was the norm too. That’s way too cheap.

If someone is giving a forecast, ask them for their track record.

And these track records are fine for forecasting AI. There are already many questions on milestones we can use to figure out who understands what is going on. The key issue is that many people don’t actually have such track records and so I happily take them much less seriously. If no one ever told you this, I am genuinely sorry that the forecasting community has communicated badly on this point.
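When comparing track records like these, one common summary is the Brier score: the mean squared difference between stated probabilities and what happened (1 if it happened, 0 if not). Zero is perfect, and always saying 50% scores 0.25. This is a minimal Python sketch; the function and the two forecasters’ histories are hypothetical.

```python
def brier_score(forecasts):
    """Mean squared error between stated probabilities and 0/1 outcomes.

    forecasts: iterable of (stated_probability, happened) pairs.
    Lower is better; 0.0 means every forecast was certain and correct.
    """
    forecasts = list(forecasts)
    if not forecasts:
        raise ValueError("no resolved forecasts")
    return sum((p - (1.0 if happened else 0.0)) ** 2
               for p, happened in forecasts) / len(forecasts)


# Hypothetical resolved forecasts for two people on the same four questions.
alice = [(0.9, True), (0.2, False), (0.7, True), (0.1, False)]
coin_flipper = [(0.5, True), (0.5, False), (0.5, True), (0.5, False)]
print(brier_score(alice), brier_score(coin_flipper))
```

Scores are only comparable between people who answered the same or similar questions; a low score earned on easy questions says little about hard ones.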

Forecasting is the worst way of predicting the future, except for all the others

Forecasting is mostly vibes and that is okay. Because so is expertise. Experts, too, are making up statements from their own inscrutable models. I don’t consider numbers to be more accurate, just more precise.

The question then, for both forecasters and experts, is “should we trust them?” And for forecasters, the way to differentiate is by looking at public track records. This is, in fact, the whole thing. Personally, I’d like us to interrogate all experts’ track records more closely.

So next time you see a forecast, don’t throw the baby out with the bathwater. Hear it as the forecaster presenting their own vibes on the question, and ask: “what’s your track record?”

[1] I know nothing about this man; I just found an expert talking about Biden staying in.

It's really a dim view of humanity to treat experts as black boxes who spit out predictions and can be judged by Brier Score ... And not even MENTION the possibility that one could ask people why they believe what they believe and try to figure out whether that reasoning is sound. :-P

What's the Brier score of Darwin? Or Einstein? If someone says AGI is impossible because silicon chips lack Godly souls, am I obliged to give them some deference if their Metaculus profile is sufficiently impressive?

COI: I don't have a forecasting track record :-P

Typo: "no risk from GPT5-5 level models"