Is EA prepared for an influx of new medium-sized donors?

On missing infrastructure

Let’s imagine a story.

I am a mid- to upper-ranking member of OpenAI or Anthropic.

I offload some of my shares and wish to give some money away.

If I want to give to a specific charity or Coefficient, fair enough.

If I am so wealthy or determined that I can set up my own foundation, also fair enough.

What do I do if I wish to give away money effectively, but don’t agree with Coefficient’s processes, and haven’t done enough research to have chosen a specific charity?

Is EA prepared to deal with these people?

Notably, to the extent that such people are predictable, I feel like we should have solutions before they turn up, not after they turn up.

What might some of these solutions look like, and which of them currently exist?

Things that currently exist

Coefficient Giving

It’s good to have a large, trusted philanthropy that runs according to predictable processes. If people like those processes, they can just give to that one.

Manifund

It’s good to have a system of re-granting and such that wealthy individuals can re-grant to people they trust to do the granting in a transparent and trackable way.

The S-Process

The Survival and Flourishing Fund has something called the “S-process”, which allocates money based on a set of trusted regranters and predictions about what things they’ll be glad they funded. I don’t understand it, but it feels like the kind of thing that a more advanced philanthropic ecosystem would use. Good job.

Things that don’t exist fully

A repository of research (~EA Forum)

The forum is quite good for this, though I imagine that many publicly available documents (Coefficient’s research, etc.) are not on the forum, so someone has to go and find them.

A philanthropic search/chatbot

It seems surprising to me that there isn’t a winsome tool for engaging with research. I built one for 80k but they weren’t interested in implementing it (which is, to be clear, their prerogative).

But let’s imagine that I am a newly minted hundred millionaire. If there were Qualy, a well-trusted LLM that linked to pages in the EA corpus and answered questions, I might chat to it a bit. EA is still pretty respected in those circles.

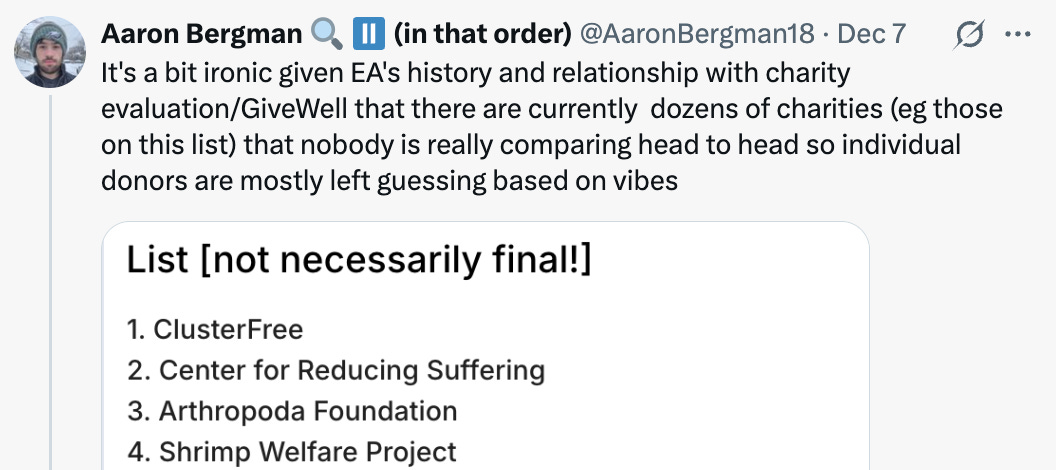

A charity ranker

As Bergman notes, there isn’t some clear ranking of different charities that I know of.

Come on folks, what are we doing? How is our wannabe philanthropist meant to know whether they ought to donate to AI, shrimp welfare or GiveWell? Vibes?

I am in the process of building such a thing, but this seems like an oversight.

EA community notes

Why is there not a page with legitimate criticisms of EA projects, people and charities that only show if people from diverse viewpoints agree with them?

I think this one is more legitimately my fault, since I’m uniquely placed to build it, so sorry about that, but still, it should exist! It is mechanically appropriate, easy to build and the kind of liberal, self-reflective thing we support with appropriate guardrails.
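
To make the "only show if people from diverse viewpoints agree" rule concrete, here is a minimal sketch in Python. It is my simplification, not how any existing community notes system works: the viewpoint labels, the `visible_criticisms` name and the majority threshold are all assumptions for illustration, and a real version would need a much better way of inferring viewpoints than self-assigned labels.

```python
from collections import defaultdict


def visible_criticisms(ratings, min_groups=2, min_agree_share=0.5):
    """Return ids of criticisms that every rating viewpoint group endorses.

    ratings: iterable of (criticism_id, viewpoint_group, agrees) tuples.
    All names and thresholds here are hypothetical, for illustration only.
    """
    # tallies[criticism_id][group] = [agree_count, total_count]
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for criticism_id, group, agrees in ratings:
        counts = tallies[criticism_id][group]
        counts[0] += int(agrees)
        counts[1] += 1

    shown = []
    for criticism_id, groups in tallies.items():
        endorsing = [
            g for g, (yes, total) in groups.items()
            if yes / total > min_agree_share
        ]
        # Show only if enough distinct groups rated it and all of them endorse it.
        if len(groups) >= min_groups and len(endorsing) == len(groups):
            shown.append(criticism_id)
    return sorted(shown)


example_ratings = [
    ("c1", "global_health", True),
    ("c1", "ai_safety", True),
    ("c2", "global_health", True),
    ("c2", "global_health", True),
    ("c2", "ai_safety", False),
    ("c3", "global_health", True),
]
print(visible_criticisms(example_ratings))
```

In this toy data only `c1` is shown: `c2` is popular with one camp and rejected by the other, and `c3` has only been rated by one camp.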

A way to navigate the ecosystem

There is probably room for something more basic than any of this that helps people decide whether they probably want to give to GiveWell, or probably to Coefficient Giving, or something else. My model is that for a lot of people, the reason they don’t give more money is that they see the money they’re giving as a significant amount, don’t want to give it away badly, but don’t want to put in a huge amount of effort to give it well.

One can imagine a site with BuzzFeed-style questions (or an LLM) which guides people through this process. Consider Anthropic’s recent interviewing tool. It’s very low friction and elicits opinions. It wouldn’t, I think, be that hard to build a tool which, at the end of some elicitation process, gives suggestions or a short set of things worth reading.
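
As a rough sketch of the non-LLM version, the quiz could be little more than a lookup table of answers and points. Everything in the table below (the questions, the answer options, the points and which destination each answer nudges towards) is a placeholder I made up for illustration, not a considered recommendation.

```python
# Hypothetical question table: question -> answer -> points per suggestion.
QUESTIONS = {
    "evidence": {  # "How much do you want a measurable track record?"
        "a lot": {"GiveWell": 2},
        "some": {"GiveWell": 1, "Coefficient Giving": 1},
        "little": {"Manifund": 1},
    },
    "delegation": {  # "Who would you rather delegate the decision to?"
        "an institution": {"Coefficient Giving": 2},
        "individuals I trust": {"Manifund": 2},
        "nobody": {"read more first": 2},
    },
}


def suggest(answers, top_n=2):
    """Turn {question: chosen answer} into a short ranked list of suggestions."""
    scores = {}
    for question, choice in answers.items():
        for option, points in QUESTIONS[question][choice].items():
            scores[option] = scores.get(option, 0) + points
    # Highest score first; ties broken alphabetically so output is stable.
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    return ranked[:top_n]


print(suggest({"evidence": "little", "delegation": "individuals I trust"}))
```

The hard part is obviously choosing the questions and the weights, not the code, which is rather the point: the mechanics are cheap.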

This bullet was suggested by Raymond Douglas, though written by me.

A new approach

Billionaire Stripe Founder Patrick Collison wrote the above tweet. I recommend reading and thinking about it. I think he’s right to say that EA is no longer the default for smart people. What does that world look like? Is EA intimidated or lacking in mojo? Or is there space for public debates with Progress Studies or whatever appears next? Should global poverty work be split off in order to allow it to be funded without the contrary AI safety vibes?

I don’t know, but I am not sure EA knows either and this seems like a problem in a rapidly changing world.

There is a notable advantage for a liberal worldview that is no longer supreme: we can ask questions whose answers we are scared of, or tell people to go away and return when they find stuff. EA not being the only game in town might be a good thing.

Imagine that 10 new EA-adjacent hundred millionaires popped up overnight. What are we missing?

It seems pretty likely that this is the world we are in, so we don’t have to wait for it to happen. If you imagine, for 5 minutes, that you are in this world, what would you like to see?

Can we build it before it happens, not after?

If you wish to fund an opportunity ranking tool or EA community notes, please get in touch. If you like my work, consider subscribing; I’m not paid to write articles like this.

“papers? essays?”

"Billionaire Stripe Founder Patrick Collison wrote the following..."

I'm confused, what did Patrick write?

Is GiveWell no longer understood to be doing this?

(Maybe they give up on evaluating 'everything' – that seems like something I vaguely remember them spelling out at some point.)

Would a tool/site you make be only for EA charities or would it also include, e.g. American Red Cross and similar non-EA philanthropies?