Recently I noticed a crux[1] with some friends. It's whether my view of the world should primarily be driven by thinking I respect or by representing the views of a broader set of people. At the limit: should I do more thinking for myself, or take surveys of many individuals?

Examples of the disagreement

Here are some of the kinds of disagreements I seek to highlight:

Katja and I discuss what we each intend to work on next, sometimes wondering whether we should work together. After writing up her thoughts, she often wants to do more individual research, whereas I want her to write up the perspectives of other public figures, both those I feel allied with, say Paul Christiano, and those I don't, say Sam Altman. Katja does want to read and critique these people, but she is much less interested in trying to sum up their work in a way they might endorse, i.e. steelmanning.

Our disagreement is whether, on the margin, it is better to do more of one's own thinking or to try to sum up someone else's.

We also disagree on how to resolve this disagreement. In a genuinely funny moment we realised that she proposed to think more about it and I proposed to make a poll and send it round to people she respects. It wasn't a set-up, but somehow we had managed to have an entire meta-argument that was analogous to the argument itself.

I have a similar disagreement with Ray Arnold and Oliver Habryka. Recently, I published two pieces on LessWrong and we disagree on which is more valuable. The first is about my views on journalists. It summarises my experiences with perhaps 10 journalists and a lifetime of reading news articles. The other is about FindingConsensus.AI, a site I built summarising public opinions on the California bill SB 1047.

To me the latter looks clearly more interesting. Rationalists' opinions on journalists are not new or deep ground, in my opinion. But trying to surface the discourse itself seems a useful thing to do.

Ray Arnold disagrees:

But my rough take is "showcasing who believes what seems... almost anti-valuable to me, because it's reinforcing a frame of social-epistemology instead of 'actually understand and figure it out', which is like one of the major problems with the world.

Habryka agrees:

I thought your journalist post was both more important and more practical than the AI post, and am indeed kind of confused why you would call a website dedicated to what various people whose opinion only really matters in social reality would be “closer to reality”

Later Ray clarified that his issue was that he didn't get much value from seeing people's positions plotted on charts. Summarising their thoughts might have been different.[2]

Summarising the disagreement

To me, then, we have a number of conflicts which perhaps turn on the same crux:

Thinking more vs asking others

Listening to thinkers one doesn’t respect vs ignoring them

Representing thoughts of others vs quoting large blocks of their text

Improving tools for thought vs tools of negotiation

An attempt at two frames

I will try to gesture at the two views,[3] the Academy and the Wasteland:

It would be easy to see this as an extension of conflict vs mistake theory, but I don’t think that maps well.

The Academy

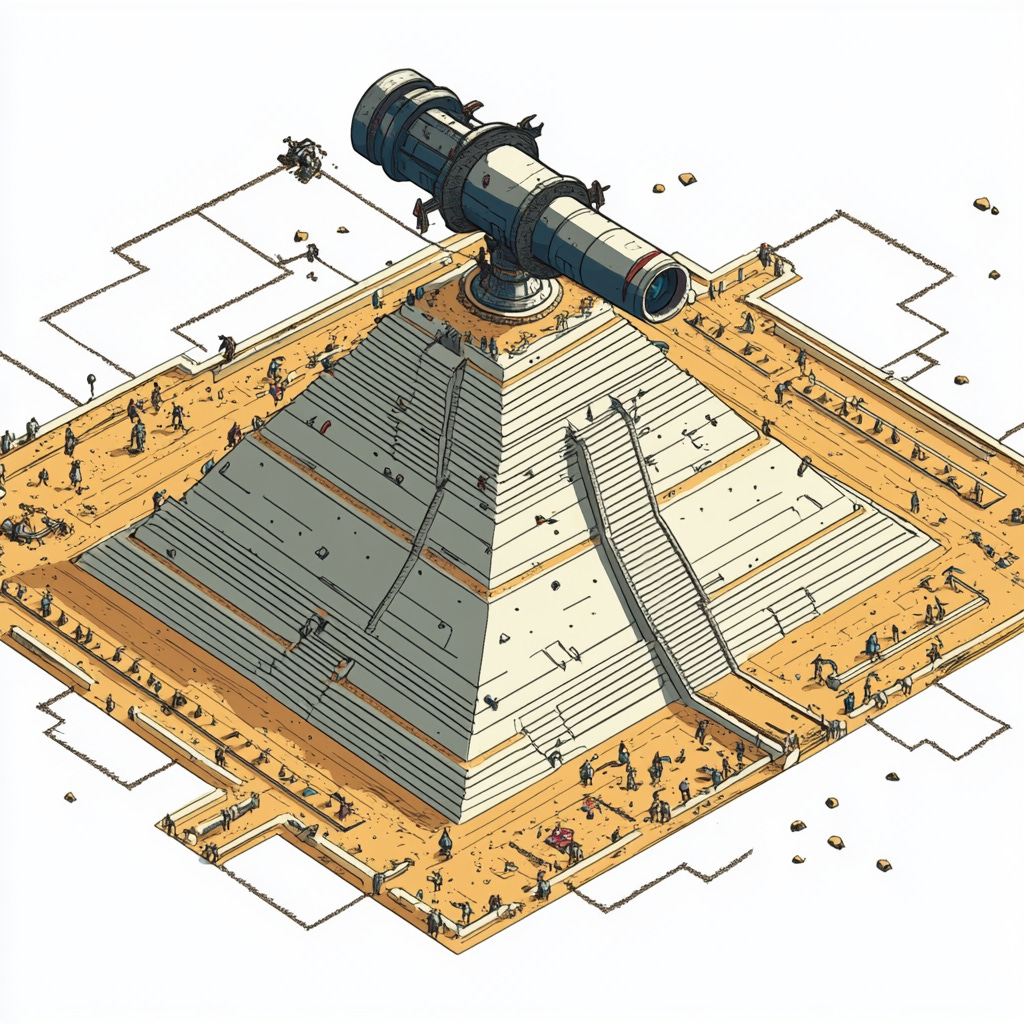

The Academy is the view that the search for truth is primarily about better arguments and better thinking. Imagine a monastery with a big telescope on top, covered in hundreds of little telescopes, swarming with scientist monks. They argue with one another about what to think and also about where to point their main telescope. That is, when searching for truth we can argue both about the details of particular claims and about what our community's views at large should be.

We might see LessWrong here. The aim is to think well about issues. To write about them. To be... less wrong. But seemingly, and I think Arnold and Habryka argue this, they are not much interested in what people they do not respect think, and even less in plotting those views on charts. Why would they care? How exactly does that help them think better? For them, the sky is the point: the stars. How are we seeing them better?

Two attempts to steelman my interlocutors:

Firstly, the Academy does have conflict. Internally there might be different positions on a specific issue. Externally there might be multiple academies or political factions using the work of the Academy. It is not that there is no conflict, but that resolving conflict is not the central aim of the Academy; its aim is finding what is true.

Secondly, this is not an attempt to paint the Academy as naïve. If some political apparatchik or enemy warlord enters, the monks are capable of behaving politically to continue their search for truth. LessWrong needs money to function, and I doubt the LessWrong boys would let you in if you turned up holding an axe. I am not aiming for a lazy pastiche of naïve rationalists vs. pragmatic Nathans. I am fairly rationalist too.

The Wasteland

My view is that the search for truth is more like a wasteland. As Scott Alexander said:

We live in the late pre-truth era

In this analogy we live in a pre-modern wasteland with warbands and small nations attempting to hold territory. This territory is the levers of the real world: political power, favourable framings in discourse, legislation. These groups have not yet figured out that trade is a more effective way of dealing with one another, and so they engage in bloody conflicts. For me, then, conflict between different worldviews is inevitable, and the question is whether it will be more or less costly.

In this view, good thinking is hard for any one group to do alone. All groups have biases, and so the best thinking might be a mix of our own and that of the warband we hate. Perhaps they don't share our sacred cows and can think clearly in ways we are unable to.

And the fact that another group is powerful implies they know things about the real world. They have managed to occupy a niche. What did they know in order to do that, and what have they learned since?

Likewise, the primary focus on this view might not be thinking better, but listening and/or negotiating. If another warband is powerful, their view of the truth may become the dominant one no matter what. In that case, it might be worth trying to correct its worst aspects. Alternatively, they might not realise that my group is actually more powerful, in which case I may end up escalating the conflict in a way I would prefer not to.

Here, rather than building better telescopes, one might push for the invention of currency, cross-border trade, terms of surrender, forecasting the results of battles. All of these might lead to better results than handing out top-of-the-line astronomy gear. The key point is that truth isn't valuable unless people believe it, and so holding ground, survival and conflict reduction are part of the truth-seeking process.

To steelman my view:

It is not that thinking isn’t valuable here, but that coordination matters too. Both as a means for finding truth and for seeing truth expressed in the world.

This view can descend into soldier mindset: seeing all discourse about truth as a battle for ground. But it can also rise above that, seeing even quite significant conflicts as a chance to learn about the views of one's "enemy", in the hope of finding ways to trade rather than fight in future.

A quick summary of the frames

Some arguments in favour of each

Each bold statement is a new argument:

Too few people think for themselves. In a world where everyone wants a quick shortcut or a summary, be the person who reads and understands the material.

Everyone already cares too much what high-status people think. We are already in a sinkhole of bad thinking, caused by deference to the views of high-status people and the attempts of others to become high-status. Personally, I can attest to this. I believed Christianity to be true until I was 25, largely on the back of what my clever friends thought about the issues. I would have done better to consider status less and truth more.[4]

It is necessary to know what others think in order to discuss truth with them. If one aims to convince others, it is worth understanding what they think first. A better understanding of Altman's thoughts on AI risk would be useful for the same reason understanding anti-nuclear advocates is useful: you can find counterarguments and head them off at the pass. I use this graph all the time when discussing nuclear energy, but perhaps I wouldn't have known it was useful unless I had seen that many anti-nuclear folks are concerned about safety.

It is worth knowing who is going to win before the conflict. Japan could not, it turns out, defeat the US in WWII. Their failure was not only one of military power but also of forecasting. People debate whether the US needed to drop the bomb, but if Japan had surrendered earlier, the US probably wouldn't have. Failures of conflict aren't just bad for the loser; they can be bad for all of us.

One of the surprises of the SB 1047 campaign was that the pro-bill coalition was pretty powerful. It included Elon Musk, Anthropic, Mark Hamill... wait, what? Focusing entirely on truth-seeking can leave one unprepared for conflict, or at times, overprepared. Dean Ball, who opposed SB 1047, said:

The cynical, and perhaps easier, path would be to form an unholy alliance with the unions and the misinformation crusaders and all the rest. AI safety can become the “anti-AI” movement it is often accused of being by its opponents, if it wishes. Given public sentiment about AI, and the eagerness of politicians to flex their regulatory biceps, this may well be the path of least resistance.

Many people do not wish to discuss things on LessWrong. Some valuable thinking happens on LessWrong, but for me, other good places are Metaculus and Manifold. Others really like the Motte, the EA Forum or Twitter. To the extent that useful thinking is happening outside of one space, it is worth trying to accurately bring it into other spaces.

Keep the main thing the main thing. There is a strong heuristic in favour of focus, and what sets truth-seeking factions apart is their desire to find the truth. Many factions think the world is about conflict and winning, and they can try to win better. We might be unique in our desire to find out what's true. To that extent, it is worth focusing on our comparative advantage, even under the Wasteland view.

A wasteland in academy clothing. I think it's sort of plausible that I'm the baddie here. I catch a tone of this in some comments I receive: that I am not actually interested in what's true, but in winning discussions and status. I think this is plausibly true. And to a man with a desire to build consensus tools, perhaps everything looks like a conflict?

The wisdom of crowds. It is a popular view among those of the Academy that the median forecast is generally more accurate than one's individual guess, unless one has a very good track record. Breaking down important questions and seeking medians seems like a reasonable way of finding truth. And if the questions are well specified, people with very different viewpoints seem ideal forecasters. Prediction markets are even better here, with the right incentives and a natural, robust track-record system.

What do you think?

Apologies for continuing to put out posts without conclusions, but sometimes I don't know the answers and want to get a draft published.

Does this dichotomy resonate with you?

[1] A hard and repeated problem I have when writing up discussions is that many people have slightly different cruxes, and it becomes hard to represent them accurately.

[2] My counter to that is that it doesn't feel incentivising to try if no one is interested in early attempts.

[3] I am not optimistic about these two views landing well, because over time I have learned it's very hard to understand deeply why I disagree with people. But I think it's still worth trying.

[4] Though it's unclear whether I should have done more personal thinking or asked people outside of my group. Perhaps both!

Coming to the original disagreement: "Our disagreement is whether, on the margin, it is better to do more of one's own thinking vs try to sum up someone else’s."

Presumably there are situations where each is good, and it depends on your goals and abilities. A tough trade-off in my mind is the balance between short-term and long-term goals. E.g. if you want to make a quick decision, finding out what others think and synthesising it will be faster than doing first-principles thinking. But if you always do this, then you do not practise the skill of first-principles thinking, so you get stuck in a bad equilibrium: 'I do not think for myself because I am not good at it, and I am not good at it because I do not think for myself.'

Of the reasons you listed, I weight 'Too few people think for themselves.' highly. In particular, the 'wisdom of crowds' argument implicitly relies on enough people thinking for themselves.

You might enjoy The Square and the Tower by Niall Ferguson