I am interviewing AI experts on what they think will happen with AI. Here are Katja Grace's thoughts. AI risk scares me, but I often feel pretty disconnected from it. This has helped me think about it.

Here are Katja’s thoughts in brief:

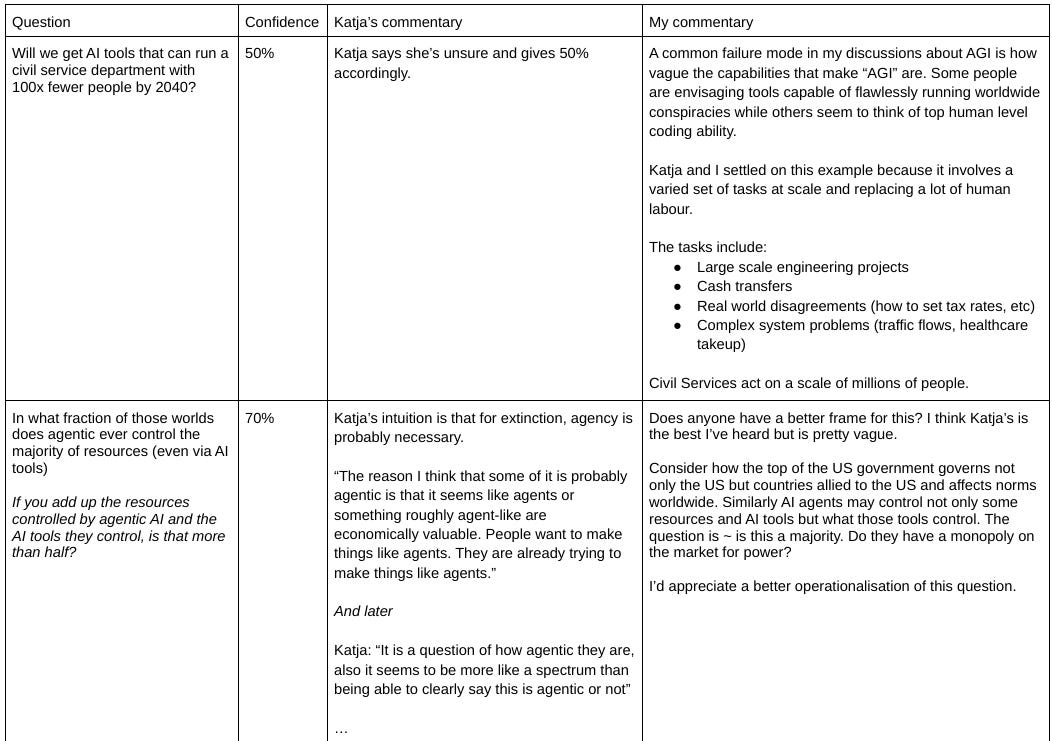

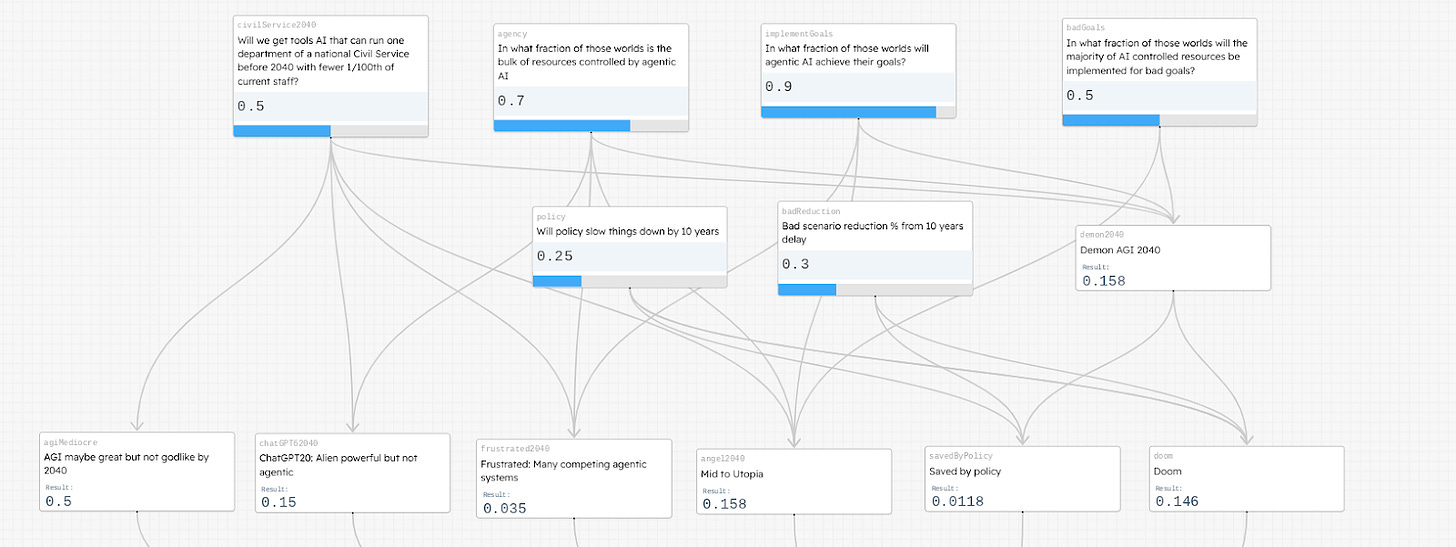

Will we get AI tools that can run a civil service department with 100x fewer people by 2040? 50%

In what fraction of worlds are the majority of AI tools controlled by agentic AI? 70%

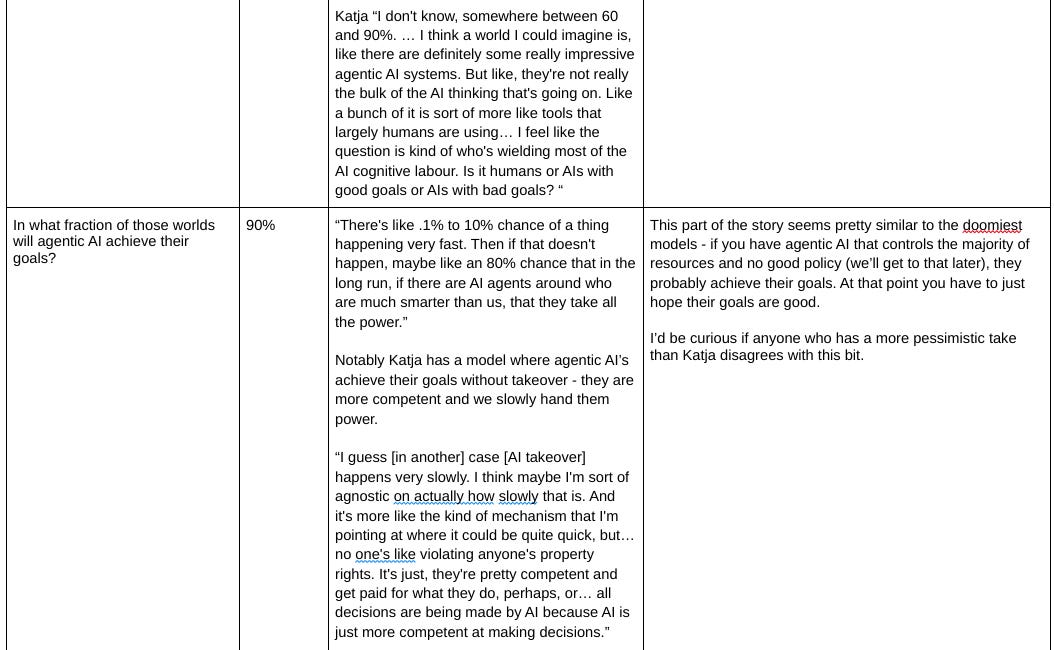

In what fraction of worlds will agentic AI achieve their goals? 90%

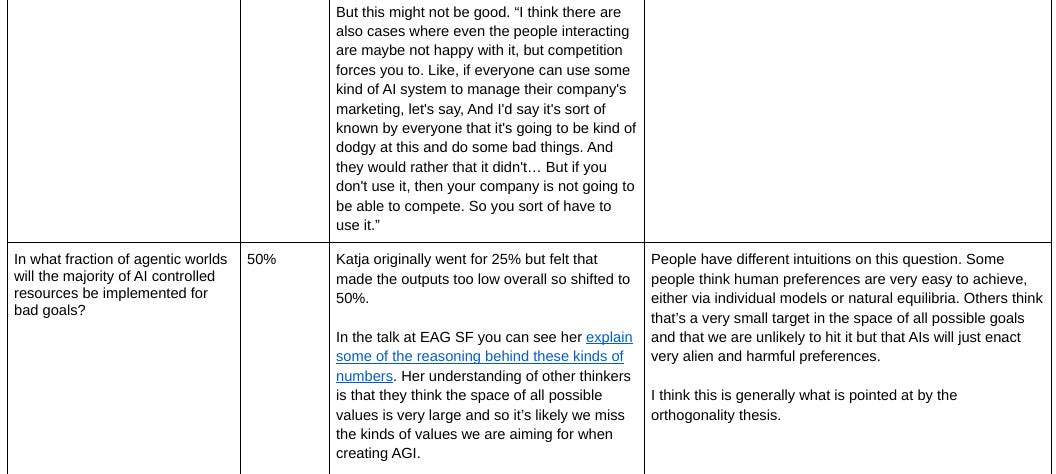

In what fraction of agentic worlds will the majority of AI controlled resources be implemented for bad goals? 50%

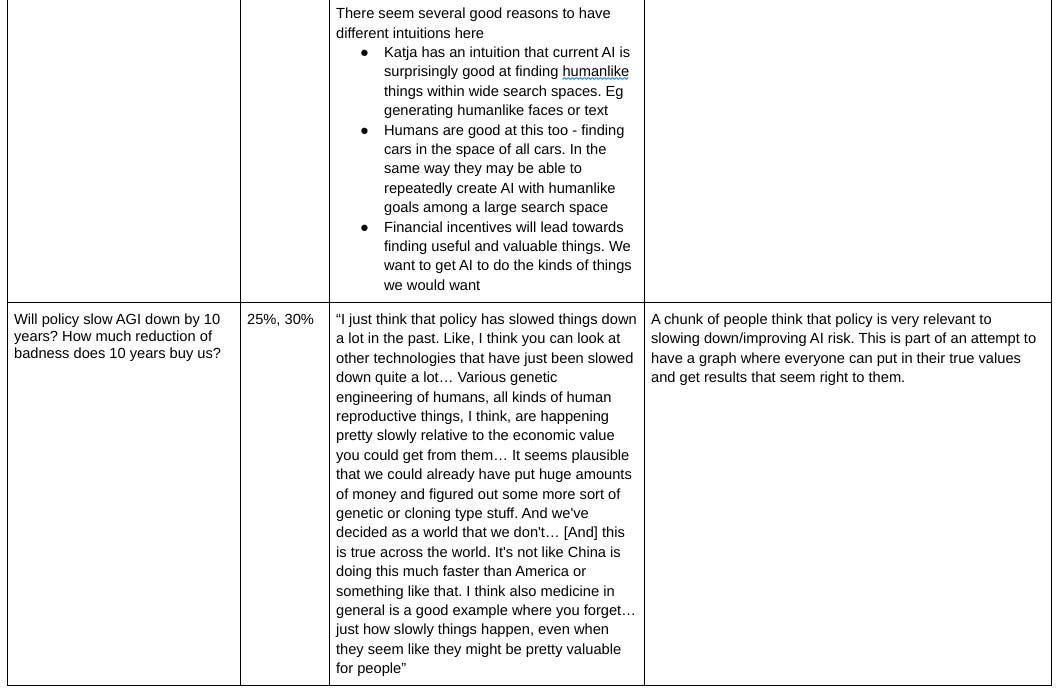

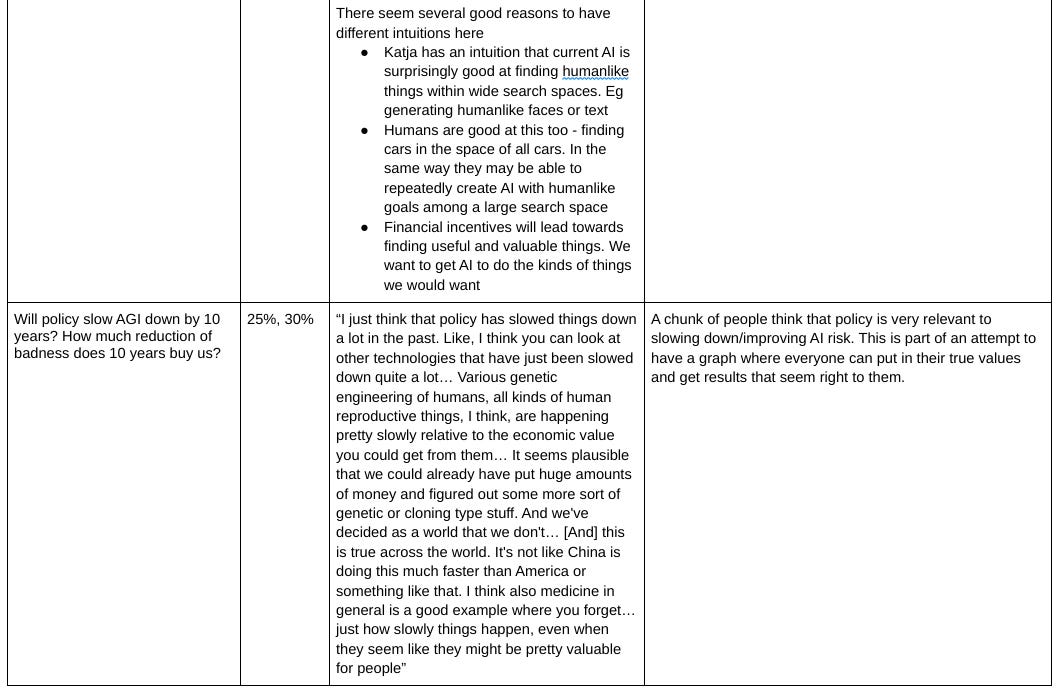

Will policy slow AGI down by 10 years? 25%. How much reduction of badness does 10 years buy us? 30%

You can look at an interactive graph here: https://estimaker.app/_/nathanpmyoung/ai-katja-grace or see all the graphs I’ve done so far at https://estimaker.app/ai. There is an image at the bottom of this page.

You can watch the full video here (yes, I have a podcast now)[1]:

Longer explanations

Substack doesn’t let me do tables, so I took screenshots. If you dislike that, go here.

How this falls out by 2040

Here is how these numbers cash out in possible worlds. These worlds are mutually exclusive and collectively exhaustive (MECE).
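As a rough check on the arithmetic, here is a minimal Python sketch that multiplies the headline estimates through in order. The variable names and the branching order are assumptions made for illustration, not something taken from the estimaker graph. The policy step in particular is a guess: it treats the chance of a 10-year delay times the reduction in badness as the share of would-be-doom worlds that get rescued. That gives roughly 1% for the policy branch, which may not match the figure listed below or how the graph actually combines those two inputs.

```python
# Headline estimates quoted in this post.
p_step_change = 0.50   # tools can run a department with 100x fewer people by 2040
p_agentic = 0.70       # majority of AI tools controlled by agentic AI
p_achieve = 0.90       # agentic AI achieves its goals
p_bad = 0.50           # majority of AI-controlled resources used for bad goals
p_policy_delay = 0.25  # policy slows AGI down by 10 years
badness_cut = 0.30     # reduction of badness that 10 years buys


def katja_worlds():
    """Split probability mass into six worlds (assumed branching order)."""
    agentic = p_step_change * p_agentic
    achieving = agentic * p_achieve
    bad = achieving * p_bad
    # Assumption: delay chance times badness reduction is the rescued share.
    saved = bad * p_policy_delay * badness_cut
    return {
        "good_but_no_step_change": 1 - p_step_change,
        "competence_no_agency": p_step_change * (1 - p_agentic),
        "agents_blocking_each_other": agentic * (1 - p_achieve),
        "midtopia_utopia": achieving * (1 - p_bad),
        "saved_by_policy": saved,
        "doom": bad - saved,
    }


worlds = katja_worlds()
for name, p in worlds.items():
    print(f"{name}: {p:.1%}")
```

Under these assumptions the first four worlds come out at 50%, 15%, 3.5% and about 16%, and the six branches sum to 100% by construction, which is what MECE requires.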

AI tools are good but not a step change (50%)

AI tools are great. Maybe they can code a lot or support a lot. But they can’t reduce the manpower required to run a department of the Civil Service by 100x. They can’t take on large projects alone, for some reason. It’s like GPT-4 but a lot better, without being a step change.

Again, this is by 2040, so this world can still lead to doom or to any of the other worlds, just after 2040.

ChatGPT20 - competence but no agency (15%)

Imagine a ChatGPT that can produce anything you ask of it but only does a few tasks or can’t call itself recursively. Unlike the above, this is genuinely a step change. You or I could run a hedge fund or a chunk of government. But we would still have to supply the vision.

Many godlike agentic AI systems blocking one another (4%)

As in the current world, many intelligent systems (people and companies) are trying to reach their outcomes and blocking one another. Somehow this doesn’t lead to the “mid/utopias” below.

AI midtopia/utopia (16%)

These are the really good scenarios where we have agentic AGI that doesn’t want bad things. There is a broad spread of possible worlds, from some kind of human uplift to a kind of superb business as usual, where we might still have much to complain about but everyone lives like the richest people do today.

Saved by policy (12%)

The share of worlds where things would have gone really badly but policy delayed things. These might be any of the other non-doom worlds: perhaps AI has been slowed a lot, or perhaps it has better goals. To keep the graph simple, it doesn’t really deal with what these worlds look like. Please make suggestions.

Doom (15%)

Unambiguously bad outcomes[2]. Agentic AGI which wants things we’d consider bad and gets them. My sense is that Katja thinks most bad outcomes come from AGI taking over, with maybe 10% of those happening quickly and 90% happening slowly.

If you would like to see more about this, Katja has much longer explanations here: https://wiki.aiimpacts.org/doku.php?id=arguments_for_ai_risk:is_ai_an_existential_threat_to_humanity:start

Visual model

https://estimaker.app/_/nathanpmyoung/ai-katja-grace

Who would you like to see this done for?

I wanted to see work like this, so I thought I’d do it. If you want to see a specific person’s AI risk model, perhaps ask them to talk to me. It takes about 90 minutes of their time and currently I think the marginal gains of every subsequent one are pretty high.

On a more general level, I am pretty encouraged by positive feedback. Should I try to get funding to do more interviews like this?

How could this be better?

We are still in the early stages, so I would appreciate lots of nitpicky feedback.

Thanks

Thanks to Katja Grace for the interview, to Rebecca Hawkins for feedback (in particular for suggesting the table layout), and to Arden Koehler for good comments (you should read her piece on writing good comments). Thanks to the person who suggested I write this sequence.

[1] Available on YouTube, Spotify and Pocket Casts as “Odds and Ends”.

[2] P(doom) is good for starting a discussion, but you want to move quickly to what would change that value. By the time we learn whether we are right or wrong on doom, it is too late. Better to try to find cruxes earlier.